The tech landscape is evolving and AI avatars are transforming how people interact with digital systems. At Anam, we're at the forefront of this revolution. But what makes our avatars feel genuinely human? Let's dive into the fundamental elements that bring our real-time AI avatars (or as we like to call them, personas) to life, with exclusive insights from our CTO and Co-Founder Ben Carr on how we approach this fascinating technical challenge.

Our Journey: From Pixels to Digital Humans

What began as simple computer-generated images has evolved into sophisticated digital humans capable of natural conversation. Our journey at Anam represents one of the most fascinating intersections of artificial intelligence, computer graphics, and human psychology.

Our story begins about a decade ago, with the early exploration of Generative Adversarial Networks (GANs) by Ben Carr, our CTO and Co-Founder. "This was long before Gen.ai was called Gen.ai," Ben recalls. "Back when GANs were just coming out. This was a huge breakthrough in the ability to generate images at the time."

After working at Synthesia, a company pioneering avatar technology with photorealistic (but non-real-time) avatars, our CEO and Co-Founder Caoimhe Murphy and our CTO and Co-Founder Ben Carr left to build Anam, with a vision to create more accessible, natural conversational AI personas.

The Human Inspiration Behind Our AI Personas

Interestingly, the inspiration for Anam came from a deeply human place. Ben recounts visiting his grandmother, who spent considerable time talking to voice assistants:

"The way she was talking to Alexa was different from the way we would talk to Alexa. She was looking for a bit more of a connection and Alexa was low EQ. Alexa wasn't able to provide that for her and it was just very jarring to see."

This observation sparked our founding mission: to create something more accessible and natural, AI personas you could talk to in natural language and get natural responses back, mimicking how humans have communicated for millennia. Technical solutions emerge from deep empathy, and this human moment became the catalyst for our entire approach.

The Four Core Elements of Human Conversation

When we started exploring avatar realism at Anam, we quickly realized it's more than just pixels and algorithms. As Ben puts it, "At its heart, the core problem is nothing short of reverse engineering human conversation." This is now our technical North Star — guiding us through an extraordinarily complex challenge, shaped by millennia of subtlety, nuance, and deeply embedded social interactions.

From "Avatar" to "Persona": A Pivotal Shift in Our Thinking

Early in our journey, we found ourselves wrestling with terminology. "Avatar" – the industry standard term – increasingly felt too technical, too distant from what we were actually creating. It emphasized the visual representation but missed the deeper essence of what makes conversation meaningful. As we pushed deeper into creating natural interactions, we deliberately shifted to using "persona" instead.

This wasn't just semantics – it represented a fundamental shift in our thinking. "Persona" captures the personality, character, and human-like qualities we're striving to embody. It acknowledges that we're not just building visual representations but creating entities with distinct conversational styles, emotional responses, and interaction patterns. This subtle linguistic pivot has profoundly shaped our technical approach, pushing us to prioritize the elements that make communication feel genuinely human rather than merely realistic. Every time we say "persona" instead of "avatar," it's a reminder that our north star is human connection, not just visual fidelity.

Let's break down the essential components we focus on daily:

1. Visual Fidelity: The Face of Our Digital Humans

Visual quality is the most immediately noticeable aspect of any Real-Time Persona and a key focus of our development. We've learned this can be broken down into three critical dimensions:

- Image Fidelity: How realistic does a still frame look? Are there artifacts, resolution issues, or unnatural elements?

- Motion Dynamics: How natural do movements appear? We've found that too-perfect linear motion feels robotic.

- Expressivity: Does the avatar show appropriate facial expressions, eye movements, and micro-expressions?

"If one part of that is lacking, then the whole experience is in the bin," notes Ben, highlighting why we're so obsessed with getting every visual element right.

At Anam, we've pioneered what's called "full frame generation," where our AI models render every pixel you see. This approach gives us greater control over expressivity and head motion but requires sophisticated AI models to avoid visual errors – a technical challenge we embrace daily.

2. Voice Synthesis: How Our Real-Time Personas Speak

Voice quality is absolutely critical to a compelling avatar experience, and it's an area where we've invested significant resources. "You can have the best face generation models in the world," says Ben, "but if the voice falls flat, the whole experience falls flat."

Through extensive testing and iteration, we've identified these key considerations for our Personas' voices:

- Voice Selection: We carefully curate voices that match each avatar's appearance and purpose

- Natural Pacing: We incorporate pauses, variations in speed, and natural rhythm

- Latency and Throughput: We obsess over how quickly the voice responds and how consistently it performs

Our most realistic Real-Time Personas include natural pauses, stutters, and disfluencies - the imperfections that make human speech feel real. We've discovered these pauses also create critical opportunities for natural head movements and expressions.

Realism is in the details, and these subtle voice characteristics make all the difference.

3. The Brain: Our Approach to LLMs and System Prompts

The "brain" of our Real-Time Personas determines how they respond, what personality they express, and how they process information. This is where our work with Large Language Models (LLMs) and system prompts becomes crucial.

"Your system prompt is your only real handle on how the persona is going to behave or how they're going to respond," Ben explains. We've developed a specialized approach to prompt engineering that defines personality, knowledge, and conversation style.

When crafting system prompts for our Real-Time Personas, we focus on:

- Keeping prompts concise for real-time performance

- Being specific and literal in instructions

- Encouraging natural speech patterns with pauses and disfluencies

- Iterating consistently to refine behavior

One of our key discoveries: instructing the LLM to include natural pauses and minor speech imperfections dramatically improves realism. "Adding a little bit of flaws and disfluencies would be my top tip," says Ben. "It sounds like a small, trivial thing, but it really does make a big difference to that immersion." This approach transforms how users perceive our technology – it becomes less about perfect outputs and more about authentic communication.
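To make the prompt guidelines above concrete, here is a minimal sketch of how such a system prompt could be assembled. It is an illustration only, not Anam's actual prompt or API: `PersonaSpec`, `build_system_prompt`, the example persona, and the 120-word budget are all hypothetical names and values chosen for this example.

```python
from dataclasses import dataclass


@dataclass
class PersonaSpec:
    """Hypothetical container for the traits a system prompt should pin down."""
    name: str
    role: str
    style: str


def build_system_prompt(spec: PersonaSpec, max_words: int = 120) -> str:
    """Assemble a short, literal system prompt that asks for natural speech."""
    lines = [
        f"You are {spec.name}, {spec.role}.",
        f"Speak in a {spec.style} tone.",
        # Specific and literal: say exactly how long a reply should be.
        "Reply in one to three short spoken sentences.",
        # Ask for small imperfections so the speech does not sound too polished.
        "Occasionally use fillers such as 'um' or 'you know', and mark a brief pause with '...'.",
        "Never use lists, headings, or emoji, because your words are read aloud.",
    ]
    prompt = " ".join(lines)
    # Concise prompts: reject anything over the (assumed) word budget.
    if len(prompt.split()) > max_words:
        raise ValueError("system prompt exceeds the word budget")
    return prompt


spec = PersonaSpec(name="Cara", role="a friendly barista at a small cafe", style="warm, casual")
prompt = build_system_prompt(spec)
print(prompt)
```

The word budget is a crude stand-in for "keep it concise"; in practice the right length and wording come from iterating on real conversations.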

4. Interaction Design: How We Handle Conversation Flow and Interruptions

Human conversation includes complex turn-taking, interruptions, and real-time adjustments. Implementing these features in our Real-Time Personas has been one of our most fascinating technical challenges – and creates dramatically more natural interactions when solved correctly.

The Art of Listening: Our Speech-to-Text Approach

Central to every natural conversation is truly listening to what the other person is saying. For our AI personas, this starts with precise speech-to-text transcription – a component that's easy to overlook but absolutely critical for realistic interactions. We've discovered that transcription quality significantly impacts how "heard" users feel during conversations with our avatars.

"The audio coming in from an interruption is off to a bad start," Ben explains, highlighting the technical nuances of capturing speech during double-talk situations. Our systems need to reliably pick out human speech even when our avatar is still speaking, just as humans do during natural conversation. This means sophisticated echo cancellation, fine-tuned voice activity detection, and expertly calibrated "end-pointing" (predicting when someone has finished speaking).

What makes our approach unique is how we handle those in-between conversational moments – the slight pauses, the moments of uncertainty, the subtle cues that indicate someone is thinking or about to speak. We've engineered our avatars to not just process words, but to engage in that beautiful conversational dance that makes human interaction so rich and meaningful. Our personas don't just hear – they listen with an attentiveness that feels remarkably human.
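As a rough illustration of voice activity detection and end-pointing, the sketch below uses a deliberately simple energy threshold with a "hangover" of silent frames. This is a textbook baseline and not a description of our production models; the function names, the threshold of 0.01, and the three-frame hangover are assumptions made for the example.

```python
from typing import List, Optional


def frame_energy(frame: List[float]) -> float:
    """Mean squared amplitude of one audio frame (0.0 for an empty frame)."""
    return sum(s * s for s in frame) / len(frame) if frame else 0.0


def find_endpoint(
    frames: List[List[float]],
    energy_threshold: float = 0.01,  # assumed value; tune per microphone
    hangover_frames: int = 3,        # silent frames required before ending the turn
) -> Optional[int]:
    """Return the index of the frame where the speaker is judged finished, else None."""
    heard_speech = False
    silent_run = 0
    for i, frame in enumerate(frames):
        if frame_energy(frame) >= energy_threshold:
            # Voice activity: a short pause earlier in the turn is forgiven.
            heard_speech = True
            silent_run = 0
        elif heard_speech:
            silent_run += 1
            if silent_run >= hangover_frames:
                return i
    return None


loud = [0.5, -0.5, 0.5, -0.5]
quiet = [0.0, 0.0, 0.0, 0.0]
# Speech, a one-frame pause, more speech, then three frames of silence.
frames = [quiet, loud, loud, quiet, loud, quiet, quiet, quiet]
print(find_endpoint(frames))
```

The hangover length is the trade-off to watch: too short and the persona cuts in during a thinking pause, too long and every reply feels slow.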

Our team has identified several critical hurdles in interruption handling:

- Echo Cancellation: We've developed specialized techniques for removing the avatar's voice from the microphone input when a user interrupts

- System-Wide Awareness: We've engineered our entire pipeline to ensure all components can immediately stop processing when interrupted

- Smooth Transitions: We're constantly refining how we create natural closing of speech rather than abrupt cutoffs
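The "system-wide awareness" idea can be sketched with task cancellation: whichever happens first, the persona finishing or the user speaking, ends the turn, and everything still running is stopped. This is a toy model built on Python's `asyncio`, not our actual pipeline. `speak` stands in for speech and face generation, and the timer that plays the role of the user interrupting is an assumption for the demo.

```python
import asyncio
from typing import List


async def speak(chunks: List[str], spoken: List[str], seconds_per_chunk: float = 0.05) -> None:
    """Pretend to stream speech one chunk at a time, recording what was said."""
    for chunk in chunks:
        await asyncio.sleep(seconds_per_chunk)
        spoken.append(chunk)


async def run_turn(chunks: List[str], interrupt_after: float) -> List[str]:
    """Speak until done, or until the simulated user interrupts."""
    spoken: List[str] = []
    speaking = asyncio.create_task(speak(chunks, spoken))
    # Stand-in for voice activity detection firing on the user's speech.
    user_spoke = asyncio.create_task(asyncio.sleep(interrupt_after))
    _, pending = await asyncio.wait(
        {speaking, user_spoke}, return_when=asyncio.FIRST_COMPLETED
    )
    # Stop whatever is still running so no stale speech plays after the interrupt.
    for task in pending:
        task.cancel()
    await asyncio.gather(*pending, return_exceptions=True)
    return spoken


chunks = ["Sure,", "um,", "let me", "check that", "for you."]
interrupted = asyncio.run(run_turn(chunks, interrupt_after=0.12))
print(interrupted)
```

A real system would also need the smooth closing of speech mentioned above, for example finishing the current word, rather than the hard stop shown here.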

"When you interrupt, you want it to be really quick," notes Ben. "You want, when the user speaks, the persona to immediately shut up." This seemingly simple interaction represents months of technical innovation on our part, as we strive to make every conversation feel natural and responsive.

Our Technical Challenges: The Hard Problems We Love Solving

Latency: Our Constant Battle for Realism

One of our biggest technical challenges is latency. Humans can respond to each other in as little as 200 milliseconds - faster than conscious thought. This presents a significant technical hurdle that we tackle daily.

"Users don't like it if they have to wait more than a second or two," Ben explains. "Latency's difficult. Humans are actually incredibly good at responding quickly. We can actually respond faster than we can think, which is kind of weird."

Our approach to optimizing for low latency involves careful coordination of multiple systems:

- Advanced transcription systems for understanding user speech

- Optimized LLM response generation

- High-performance text-to-speech conversion

- Accelerated face animation generation

- Efficient network transmission

Every millisecond counts in our quest to make conversations feel natural. We've made latency a north star metric for our development team, with the goal of bringing it down from our current 500 milliseconds to 200 milliseconds.
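A simple way to reason about that goal is a latency budget across the stages listed above. The sketch below is illustrative only: the per-stage numbers are invented so that they add up to the 500 milliseconds mentioned here, and the stages are treated as strictly sequential, which is an assumption (a real pipeline can stream and overlap them).

```python
from typing import Dict

# Hypothetical per-stage latencies in milliseconds (made up for illustration).
stage_ms: Dict[str, int] = {
    "transcription": 120,
    "llm_first_token": 180,
    "text_to_speech": 90,
    "face_generation": 70,
    "network": 40,
}


def total_latency(stages: Dict[str, int]) -> int:
    """End-to-end latency if every stage runs one after another."""
    return sum(stages.values())


def over_budget(stages: Dict[str, int], target_ms: int) -> int:
    """Milliseconds that must be removed to reach the target (0 if already met)."""
    return max(0, total_latency(stages) - target_ms)


def largest_stage(stages: Dict[str, int]) -> str:
    """The stage contributing the most latency, i.e. the first place to look."""
    return max(stages, key=lambda name: stages[name])


TARGET_MS = 200
total = total_latency(stage_ms)
print(f"total: {total} ms, over budget by {over_budget(stage_ms, TARGET_MS)} ms")
print(f"largest contributor: {largest_stage(stage_ms)}")
print(f"uniform scale factor needed: {TARGET_MS / total:.2f}")
```

Under these assumed numbers, every stage would need to shrink to 40% of its current time, which is why overlapping stages matters as much as speeding up any single one.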

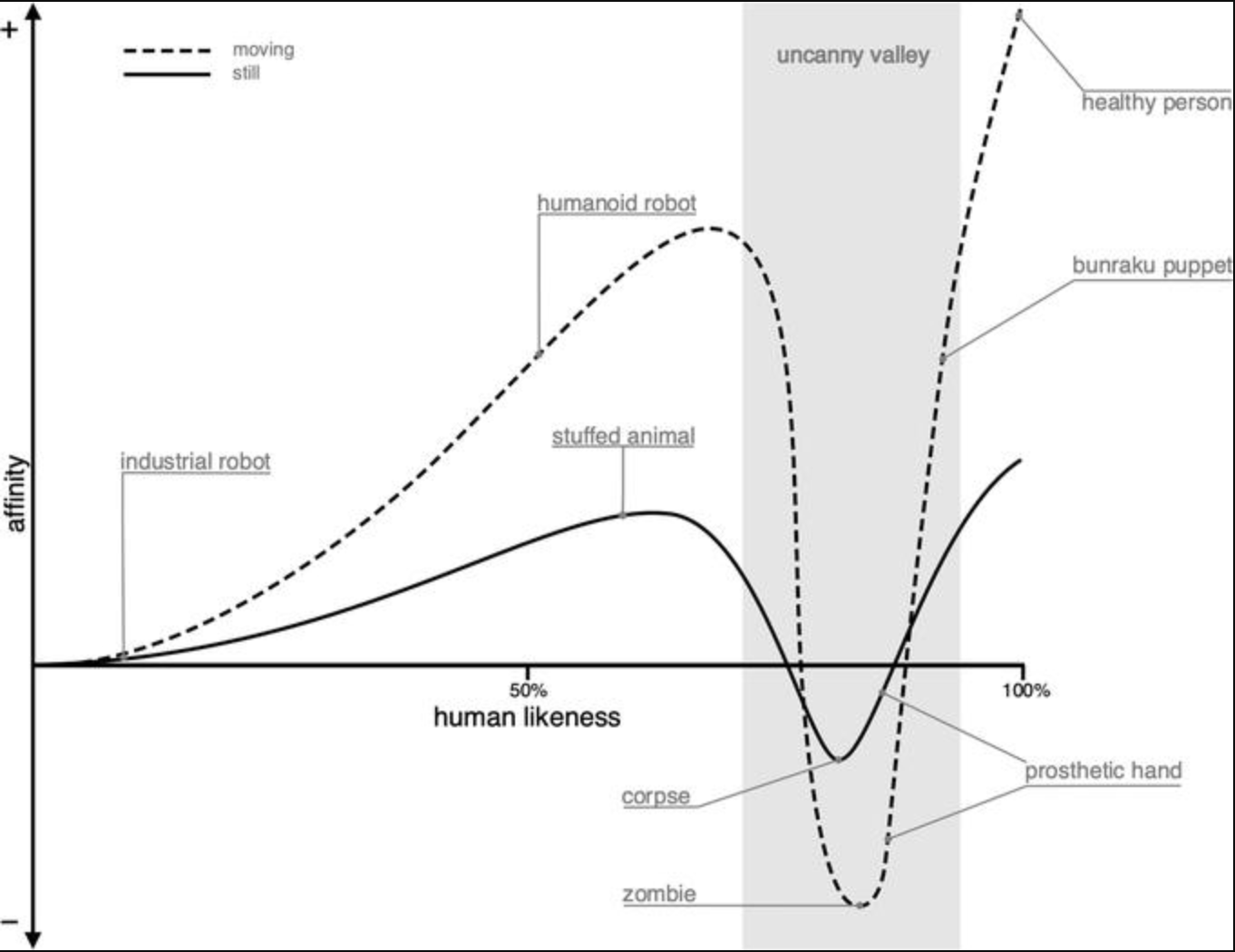

The Uncanny Valley: Our Mission to Cross It

Humans have evolved over hundreds of thousands of years to interpret facial expressions and body language. This makes everyone extremely sensitive to subtle errors in avatar appearance or behavior – a challenge we embrace.

"If you get them wrong, even if it's just a few pixels, you're shuffling around a few pixels wrong, it can throw the whole experience off, immersion's broken, and you're deep in non-canny valley," Ben explains. This is why we obsess over details that might seem insignificant but make all the difference in perceived realism.

Our Vision: The Future of Real-Time Personas

As we continue to refine our technology, we're excited about how our Real-Time Personas will transform interactions across various applications:

- Teaching assistants and tutors that adapt to individual learning styles

- Sales representatives that connect authentically with potential customers

- Customer service representatives that provide genuine human connection

- Companions for elderly individuals that offer meaningful conversation

- Brand ambassadors that embody your company's unique voice and values

- Health and wellness coaches that provide personalized support

Our goal isn't perfect technology, but technology that feels natural and creates meaningful connections. As Ben's grandmother demonstrated in that foundational moment for our company, there's a deeply human desire for technology that can provide genuine interaction. This human insight drives every technical decision we make.

Our One-Shot Innovation: The Next Big Element

Until recently, our personas were based on real actors and models captured in our studio. However, we've developed a groundbreaking "one-shot" method that allows creating custom personas from a single image.

"A single image is all you need," explains Ben. "This is a huge unlock because suddenly if you've got a very niche use case, you want a barista in a particular cafe with a particular apron on, something that's possible."

Here is why this is such a significant technical breakthrough. Traditional AI avatar creation involves extensive studio time with specialized equipment, multiple cameras, and hours of actor time capturing different expressions and movements. The post-production process alone could take days or even weeks, with costs running into thousands of dollars per avatar.

Our one-shot innovation completely transforms this equation. What used to take weeks now happens in seconds. What used to cost thousands now costs pennies. The technical magic happens through our specialized neural networks that can infer a complete 3D model and animation capabilities from just a single 2D image.

The speed-to-process difference is staggering – we're talking about a 1000x improvement in some cases. But the real excitement isn't just about efficiency or cost savings (though those are incredible benefits). It's about democratizing avatar creation. Now small businesses, educators, and creators of all kinds can build personalized AI avatars tailored exactly to their needs without enterprise-level budgets.

Every time we see someone light up when they create their first custom avatar in seconds, we're reminded why we tackled this seemingly impossible technical challenge. It wasn't just about the algorithms; it was about understanding the human desire to create and communicate through technology that feels personal and authentic.

Our Philosophy: The Human Element in Digital Humans

What makes our Real-Time Personas compelling isn't just technical excellence but their ability to mimic the beautiful imperfections and nuances of human communication. We've discovered that the most realistic AI personas aren't those that are flawless, but those that incorporate the subtle pauses, expressions, and conversational rhythms that make human interaction so rich.

"When it sounds and looks too polished, that's also uncanny valley triggering," Ben emphasizes. "So adding a little bit of flaws and disfluencies would be my top tip."

As we continue to push the boundaries of AI avatar technology at Anam, our focus remains steadfast: creating digital humans that connect with people in ways that feel authentic and natural - not perfect, but perfectly human. Every technical innovation serves this deeply human purpose.

Creating Your Own Real-Time Personas: Our Tools and Approaches

We've designed our platform to make creating Real-Time Personas accessible regardless of your technical expertise. We offer two main approaches:

- Custom LLM Integration: Our more technical approach where developers choose which components they want to incorporate and have granular control

- Turnkey Solution: Our comprehensive option where we handle all aspects - transcription, LLM calls, text-to-speech, and face generation

"You can do a no-code solution where you just go into the lab... You can just press chat and there you go. You're talking to a custom persona," Ben explains. We've spent countless development hours making this process as seamless as possible, because we believe everyone should be able to create a compelling Real-Time Persona without needing deep technical expertise.
